Ever found yourself feeling puzzled by artificial intelligence’s (AI’s) strange, misplaced replies? You’re not alone. These moments have also puzzled me and led to my exploration of a phenomenon known as “hallucination” in AI—instances where the technology utters things that seem completely off-base.

My journey into understanding this digital quirk has revealed some fascinating insights and practical solutions. This article is your guide to understanding AI hallucinations and grounding and offers strategies for staying anchored in reality.

Grounding and Hallucinations in AI [Key Takeaways]

- AI hallucinations happen when an AI tool gives wrong information that seems real. This can be due to poor data, trying too hard to remember details, or not having common sense.

- Grounding AI means tying an AI’s answers to relevant, trusted information and giving it clear rules. This helps reduce hallucinations by keeping the AI focused on truth and facts.

- To keep AI from making mistakes, we use special tricks, such as fine-tuning with better data and checking its work. We also tell AI exactly what job it has to do.

- Making a template for AI helps guide it so it doesn’t make things up. The clearer we are about what we want, the less likely the AI will get things wrong.

- It’s important to check over the work of AIs often. That way, we can catch mistakes before they cause problems. With these steps, AIs can give us helpful and correct answers and are less likely to hallucinate.

What Are Grounding and Hallucinations in AI?

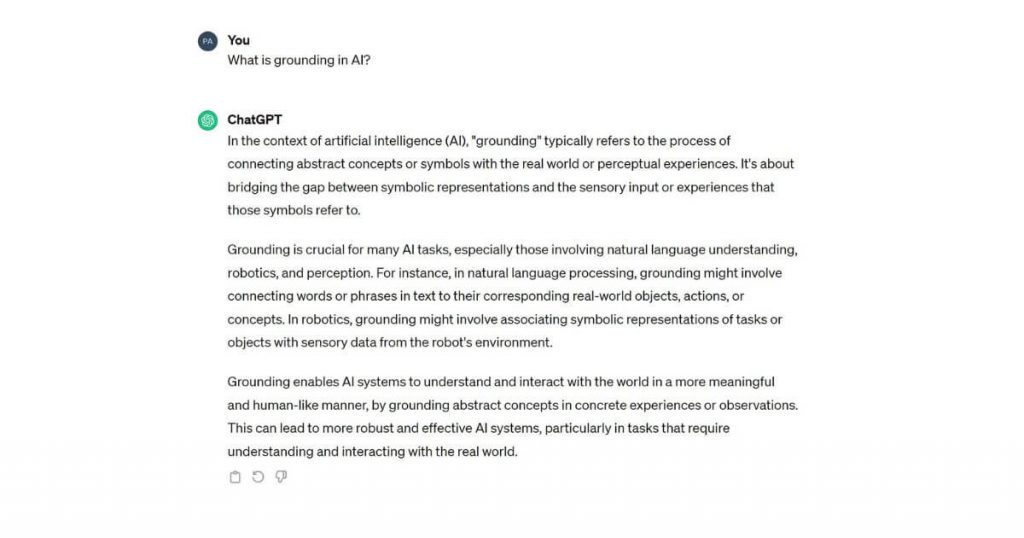

Hallucinations in AI are false results or responses produced by an AI system. They can stem from training data issues, a lack of clear instructions, or other problems. Grounding addresses this challenge: a grounded system responds to a query or prompt by first retrieving relevant information from a trusted source or system of record, and then generating its response within those better-defined parameters, which makes the response more relevant and accurate.

Understanding AI Hallucinations

AI hallucinations in large language models (LLMs) are inaccuracies or errors in the generated output, often caused by incomplete or irrelevant data sources. These can have a significant impact on decision-making and the overall performance of AI models.

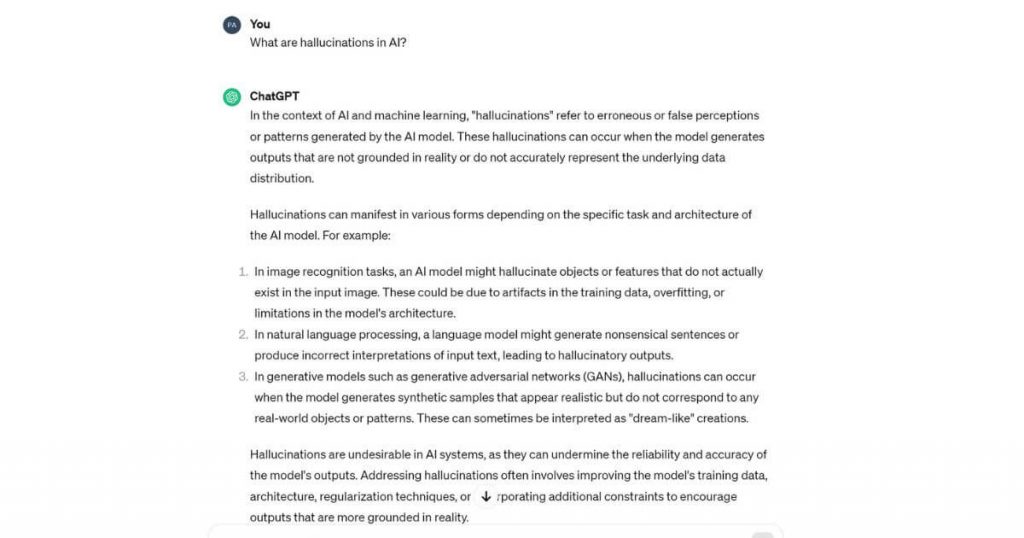

Definition of hallucinations in AI

Hallucinations in AI are a real challenge. Imagine you’re talking to an AI, and it starts making up “facts” that aren’t true. That’s what happens when an AI hallucinates. It isn’t dreaming or seeing things. It’s generating information that seems real but is completely false.

As I’ve dived deeper into this world of generative AI models, I’ve learned how important accuracy is. An LLM sometimes presents made-up content as if it were factual, a failure known as hallucination. This can lead to biased or harmful outputs.

Grounding steps in here. It’s a way of keeping AI truthful by ensuring the answers are fact-based and correct. We always aim for a responsible artificial intelligence that knows the difference between reality and fiction—just like we do.

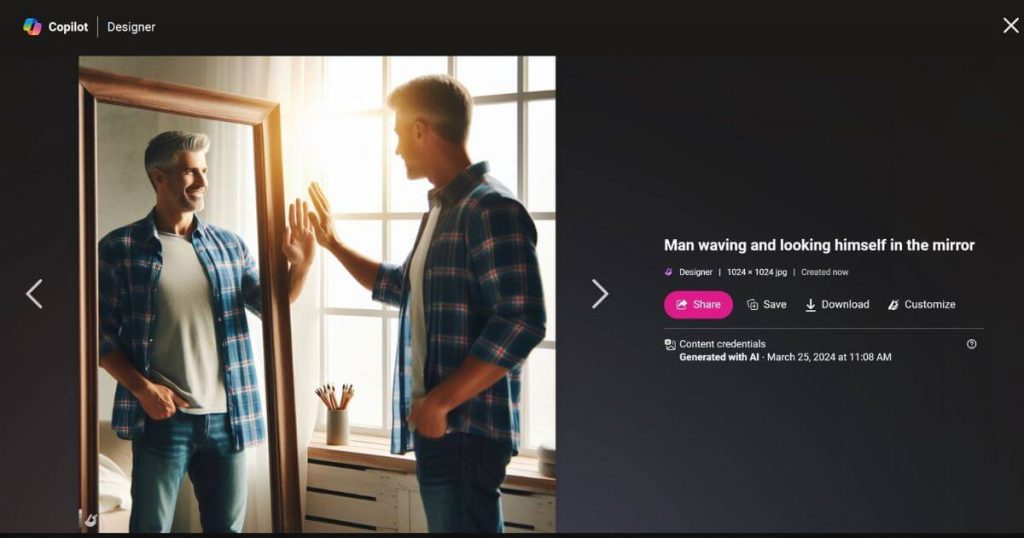

AI hallucination and inaccuracy can happen in images as well. Here’s a vivid example generated with Bing’s Image Creator (arm not in the mirror frame):

Causes of hallucinations

AI can sometimes get things wrong, almost as though it is seeing something that isn’t there. We call these mistakes hallucinations in AI, and they can happen for a few reasons, including:

- Unreliable training data: If an AI model learns from poor data, it might start making things up. When the AI is trained on inaccurate or biased information, its output can be fabricated or skewed in the same way.

- Overfitting during learning: Sometimes, an AI tool tries too hard to remember every little detail from its training. That can make it get things wrong when seeing new material because it “thinks” too much about the older data.

- Confusing inputs: If we ask AI questions that are quite vague or tricky without providing additional context, it might generate answers that don’t make sense. Clear and specific prompts help keep AI on track.

- Lack of common sense: Despite all their smarts, AI models can miss basic things that humans just know. Without grounding, that is, sticking to real-world facts, they start to make wild guesses.

- Too much creativity: LLMs like OpenAI’s ChatGPT are good at creating content. However, if we don’t guide them correctly, they might invent facts or stories instead of giving us the truth.

Impact of hallucinations

Now, let’s talk about what happens when AI starts seeing things that aren’t there. Hallucinations in LLMs like OpenAI’s ChatGPT can lead to a range of issues. The models might give out false data or create stories that seem real but aren’t. This can lead people who use AI to trust bad information.

Imagine you’re using an AI tool to get answers for your homework, and it gives you made-up facts. Alternatively, think about businesses that rely on AI for decisions. Hallucinations could steer them in the wrong direction.

We need to keep these AIs in check to make sure they stay on track and give us the right information. It’s about keeping our digital helpers honest so we can count on them when it matters most.

Grounding AI

In grounding AI, it is crucial to implement techniques that prevent hallucinations and ensure the AI is working with relevant and specific data sources. This includes creating a template for the AI to follow and giving it a specific role and direction. These steps are essential in mitigating the risk of AI hallucinations and promoting responsible AI use.

Techniques to prevent hallucinations

We can use various techniques and strategies to prevent AI hallucinations, including:

- Fine-tuning the AI model with specific use case data to improve performance in generating patterned and domain-constrained outputs.

- Employing prompt engineering to provide clear instructions and guidance on the task for the generative AI model.

- Implementing retrieval-augmented generation (RAG), which pairs a retrieval step with a generative model so that responses draw on retrieved documents, to counteract limited or incomplete information.

- Using reinforcement learning, for example with human feedback or adversarial networks, to discourage hallucinations and enhance the AI system’s generation strategy.
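
To make the retrieval-augmented idea from the list above more concrete, here is a minimal sketch of the "retrieve first, then generate" pattern. Everything in it is an assumption for illustration: the `TRUSTED_DOCS` list stands in for a real system of record, the word-overlap scoring is a deliberately crude substitute for a proper search index, and no actual model is called. The function only builds the grounded prompt that would be sent to one.

```python
import re

# Hypothetical trusted knowledge base (stand-in for a real system of record).
TRUSTED_DOCS = [
    "The return window for online orders is 30 days from delivery.",
    "Standard shipping takes 3 to 5 business days.",
    "Gift cards cannot be exchanged for cash.",
]

def tokenize(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, docs, top_k=1):
    """Rank documents by how many word tokens they share with the query."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep only documents that share at least one word with the query.
    return [doc for score, doc in scored[:top_k] if score > 0]

def build_grounded_prompt(query, docs):
    """Retrieve first, then wrap the retrieved passages around the question."""
    passages = retrieve(query, docs)
    context = "\n".join(f"- {p}" for p in passages) or "- (no relevant source found)"
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you do not know.\n"
        f"Sources:\n{context}\n"
        f"Question: {query}"
    )

prompt = build_grounded_prompt("How long is the return window?", TRUSTED_DOCS)
print(prompt)
```

The point of the design is that the model never answers from memory alone: the prompt carries the source text with it, along with an explicit instruction for the case where the sources are silent.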

Importance of relevant and specific data sources

Relevant and specific data sources are crucial for grounding AI. Fine-tuning with data from the specific use case helps improve the AI’s performance on that use case. RAG helps when the model’s own information is limited or incomplete, because it can pull the missing details from those sources.

Grounding AI requires accurate and detailed data to minimize hallucinations and ensure factual outputs. Proper grounding strengthens the reliability of AI-generated content and enhances its effectiveness in real-world applications, benefiting both developers and end-users alike.

Creating a template for AI to follow

To create a template for AI to follow, we start by curating specific and relevant data sources. This involves selecting datasets that provide accurate information and are less likely to cause the AI to hallucinate.

Additionally, giving the AI a specific role and guidelines helps provide clarity on its function, reducing the likelihood of generating incorrect information. By implementing these steps, we can ensure that the AI has a structured framework to operate within while minimizing the risk of hallucinations.
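
As a rough illustration of what such a template might look like, the sketch below uses Python’s standard `string.Template`. The slot names (`task`, `reference`, `answer_format`, `question`) and the wording of the template are my own assumptions, not a standard.

```python
from string import Template

# Hypothetical prompt template; slot names and wording are illustrative only.
PROMPT_TEMPLATE = Template(
    "Task: $task\n"
    "Use only this reference material:\n$reference\n"
    "Answer format: $answer_format\n"
    "If the reference material does not cover the question, reply 'I don't know.'\n"
    "Question: $question"
)

def fill_template(task, reference, answer_format, question):
    """Fill every slot; substitute() raises KeyError for any unfilled slot,
    so a gap in the template cannot slip through silently."""
    return PROMPT_TEMPLATE.substitute(
        task=task,
        reference=reference,
        answer_format=answer_format,
        question=question,
    )

filled = fill_template(
    task="Answer a customer question",
    reference="Standard shipping takes 3 to 5 business days.",
    answer_format="One short sentence",
    question="How long does shipping take?",
)
print(filled)
```

Because every request goes through the same template, the reference material and the fallback instruction are never left out by accident.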

Giving AI a specific role and direction

Grounding AI requires giving it a specific role and direction. Prompt engineering provides clear instructions to the generative AI model, guiding its task performance. Reinforcement learning can further bolster this by using adversarial networks or human feedback to improve the AI system’s generation strategy, ultimately steering it away from hallucinations and toward accurate outputs.

By providing a clear template for the AI to follow and equipping it with focused guidelines, we can ensure that the AI remains grounded and less prone to generating inaccurate information or hallucinating. These steps help shape the AI’s understanding of its purpose and facilitate its ability to produce relevant content based on reliable data sources.
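
One common way to give a model a role and direction is a "system" message placed ahead of the user’s question. The sketch below assumes the role/content message layout that many chat-style tools accept. The `build_messages` helper and the bookstore scenario are hypothetical, and the exact format a given tool expects may differ.

```python
def build_messages(role_description, rules, user_question):
    """Return a chat-style message list with the role and rules in a system message."""
    # Number the rules so the model sees them as an explicit checklist.
    rule_lines = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    system_text = f"You are {role_description}.\nFollow these rules:\n{rule_lines}"
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": user_question},
    ]

messages = build_messages(
    "a support assistant for a hypothetical online bookstore",
    [
        "Only answer questions about orders and shipping.",
        "Say 'I don't know' rather than guessing.",
    ],
    "Where is my order?",
)
for message in messages:
    print(message["role"], "->", message["content"])
```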

Implementing verification processes

Implementing verification processes is crucial to ensuring that AI systems produce accurate and reliable outcomes. By incorporating techniques such as RAG and reinforcement learning, we can enhance the system’s ability to cross-verify information from multiple sources, diminishing the likelihood of hallucinations.

Additionally, fine-tuning plays a pivotal role by leveraging domain-specific data to refine the AI model’s outputs, ultimately strengthening its grounding and reducing the probability of inaccuracies. Prompt engineering matters here too: it gives the generative model clear guidelines on what tasks it should perform, guiding it toward precise data retrieval.
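
A verification step can be pictured as a check that runs on the AI’s answer before anyone relies on it. The sketch below flags answer sentences that share few words with any trusted source. This is only a crude proxy that I am using for illustration: word overlap does not prove a sentence is true, and the `unsupported_sentences` helper and its 0.6 threshold are assumptions, not an established method.

```python
import re

def tokens(text):
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def unsupported_sentences(answer, sources, threshold=0.6):
    """Return answer sentences whose words are poorly covered by every source."""
    flagged = []
    # Split on whitespace that follows sentence-ending punctuation.
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = tokens(sentence)
        if not words:
            continue
        # Share of the sentence's words found in the best-matching source.
        best = max(
            (len(words & tokens(src)) / len(words) for src in sources),
            default=0.0,
        )
        if best < threshold:
            flagged.append(sentence)
    return flagged

sources = ["Standard shipping takes 3 to 5 business days."]
answer = (
    "Standard shipping takes 3 to 5 business days. "
    "Express shipping is free on Mondays."
)
print(unsupported_sentences(answer, sources))
# prints ['Express shipping is free on Mondays.']
```

Flagged sentences are candidates for human review rather than automatic rejection, since a true statement can simply be phrased differently from its source.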

Expert Thoughts and Opinions

In the quest to combat AI hallucination, we’ve gathered insights from experts to share their strategies for keeping generative AI models on track. Here’s what they have to say:

“Addressing the challenge of AI hallucination involves implementing robust validation and verification processes within AI models. By incorporating techniques such as prompt engineering and retrieval-augmented generation, we can guide the AI model’s output towards relevant and contextually appropriate responses. Additionally, fine-tuning the model’s parameters and optimizing reinforcement learning algorithms can help mitigate the risk of producing irrelevant outputs.” — Ashwin Ramesh, CEO, Synup

“AI hallucinating… [is akin to] concocting an elaborate story that doesn’t connect to the conversation at hand. It’s a limitation we have to anticipate. The key is striking the right balance – enabling creative flourishes without veering into pure fantasy. There are still gaps in AI comprehension that lead to these hallucinatory tangents. Closing those gaps is one of the next big challenges in building a more grounded, logical AI system.” — Will Yang, Head of Growth and Marketing, Instrumentl

“In order to address the problem of AI hallucination, we implement a comprehensive strategy encompassing rigorous data preprocessing, model validation, and post-processing methodologies. Establishing generative AI models on a solid foundation can be achieved by enforcing stringent input-output constraints throughout the training process.” — Jessica Shee, Senior Tech Editor and Marketing Content Manager, iBoysoft

“By crafting precise, context-rich prompts, we guide our AI to produce relevant, useful content. This method helps minimize those off-the-wall AI creations, steering clear of the “Salvador Dalí on the Starship Enterprise” scenarios. Plus, it ensures our AI keeps delivering the innovative, reliable support our users count on.” — John Xie, Co-Founder and CEO, Taskade

Conclusion

Understanding AI hallucinations is crucial for ensuring the reliability of generative models. Techniques such as grounding and fine-tuning play a vital role in mitigating these issues.

By providing specific data and guiding AI with clear instructions, we can prevent hallucinations and enhance the accuracy of AI-generated outputs.

It’s essential to continue exploring innovative approaches to minimize these challenges as AI technology continues to advance.

FAQ

Why might an AI give the wrong answer?

Mix-ups may arise from AI hallucinations if the system isn’t properly grounded. In short, it didn’t learn correctly how real-world concepts link to what it is trying to process.

How do we stop AI hallucinations?

To mitigate AI hallucinations, developers work on improving how models learn so that they handle context better, and they add spot checks that test responses for accuracy.

Is there a way I can tell if an AI is having hallucination hiccups?

If the information seems off, that’s the clue. Always double-check with credible sources or ask for clarification to ensure you’ve got the correct answer when using AI systems.